Descripción basic del contenido

- Configuración

- Configurar el modelo RESNET-18 para el conjunto de datos CIFAR-10

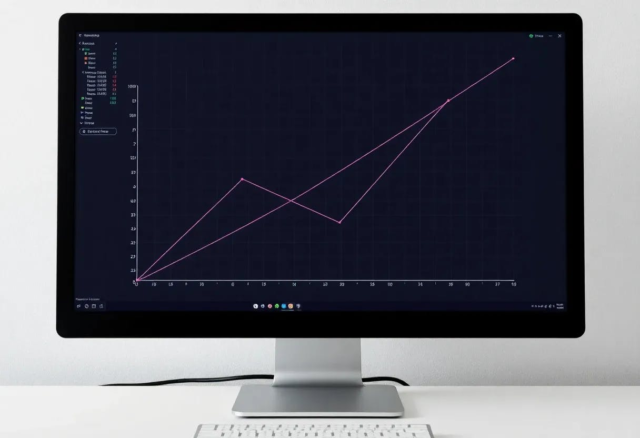

- Visualizar el modelo de entrenamiento

- Visualizar el modelo de prueba

- Entrenar y evaluar

- Exportar un mannequin salvado

This tutorial fine-tunes a residual network (ResNet) from the TensorFlow Model Garden package (tensorflow-models) to classify images in the CIFAR dataset.

Model Garden contains a collection of state-of-the-art vision models, implemented with TensorFlow's high-level APIs. The implementations demonstrate best practices for modeling, letting users take full advantage of TensorFlow for their research and product development.

This tutorial uses ResNet-18, a convolutional neural network with 18 layers from the ResNet family of state-of-the-art image classifiers.
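As a quick sanity check on the "18 layers" figure, the count can be reproduced with a little arithmetic. This is a minimal sketch that assumes the usual ResNet-18 layout (one stem convolution, four stages of two basic blocks with two convolutions each, and one final dense layer); the helper name is hypothetical and shortcut projections are not counted.

```python
# Count the weighted layers of ResNet-18 under the usual layout assumption:
# one stem convolution, four stages of basic blocks, one final dense layer.
BLOCKS_PER_STAGE = [2, 2, 2, 2]   # basic residual blocks in each of the 4 stages
CONVS_PER_BASIC_BLOCK = 2         # each basic block stacks two 3x3 convolutions


def count_weighted_layers(blocks_per_stage, convs_per_block):
    """Return stem conv + all block convs + final classifier layer."""
    stem_conv = 1
    classifier = 1
    body = sum(blocks_per_stage) * convs_per_block
    return stem_conv + body + classifier


resnet18_layers = count_weighted_layers(BLOCKS_PER_STAGE, CONVS_PER_BASIC_BLOCK)
print(resnet18_layers)
```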

This tutorial demonstrates how to:

- Use models from the TensorFlow Models package.

- Fine-tune a pre-built ResNet for image classification.

- Export the tuned ResNet model.

Setup

Install and import the necessary modules.

pip install -U -q "tf-models-official"

Import TensorFlow, TensorFlow Datasets, and a few helper libraries.

import pprint
import tempfile

from IPython import display
import matplotlib.pyplot as plt

import tensorflow as tf
import tensorflow_datasets as tfds

The tensorflow_models package contains the ResNet vision model, and the official.vision.serving module contains the function to save and export the tuned model.

import tensorflow_models as tfm

# These are not in the tfm public API for v2.9. They will be available in v2.10.
from official.vision.serving import export_saved_model_lib
import official.core.train_lib

Configure the ResNet-18 model for the CIFAR-10 dataset

The CIFAR-10 dataset contains 60,000 color images in 10 mutually exclusive classes, with 6,000 images in each class.

In Model Garden, the collections of parameters that define a model are called configs. Model Garden can create a config based on a known set of parameters via a factory.
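The factory idea can be illustrated without TensorFlow. The following is a minimal sketch of a name-to-config registry, not Model Garden's implementation: `register_config`, `get_config`, the `toy_imagenet` name, and the default values are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ModelConfig:
    # Illustrative defaults only; not taken from Model Garden.
    num_classes: int = 1000
    input_size: list = field(default_factory=lambda: [224, 224, 3])


@dataclass
class ExperimentConfig:
    model: ModelConfig = field(default_factory=ModelConfig)
    train_steps: int = 0


_REGISTRY = {}


def register_config(name):
    """Register a function that builds a fresh config under `name`."""
    def decorator(builder):
        _REGISTRY[name] = builder
        return builder
    return decorator


@register_config("toy_imagenet")
def toy_imagenet():
    return ExperimentConfig(model=ModelConfig(), train_steps=1000)


def get_config(name):
    """Look up a registered builder and return a new config object."""
    return _REGISTRY[name]()


# Fetch a known config, then override fields for a smaller dataset.
config = get_config("toy_imagenet")
config.model.num_classes = 10
config.model.input_size = [32, 32, 3]
```

Because the factory builds a new object on every call, overriding fields on one config leaves later lookups of the same name untouched.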

Use the resnet_imagenet factory configuration, as defined by tfm.vision.configs.image_classification.image_classification_imagenet. The configuration is set up to train ResNet to converge on ImageNet.

exp_config = tfm.core.exp_factory.get_exp_config('resnet_imagenet')
tfds_name = 'cifar10'
ds, ds_info = tfds.load(
    tfds_name,
    with_info=True)
ds_info

tfds.core.DatasetInfo(
    name='cifar10',
    full_name='cifar10/3.0.2',
    description="""
    The CIFAR-10 dataset consists of 60000 32x32 color images in 10 classes, with 6000 images per class. There are 50000 training images and 10000 test images.
    """,
    homepage='
    data_dir='gs://tensorflow-datasets/datasets/cifar10/3.0.2',
    file_format=tfrecord,
    download_size=162.17 MiB,
    dataset_size=132.40 MiB,
    features=FeaturesDict({
        'id': Text(shape=(), dtype=string),
        'image': Image(shape=(32, 32, 3), dtype=uint8),
        'label': ClassLabel(shape=(), dtype=int64, num_classes=10),
    }),
    supervised_keys=('image', 'label'),
    disable_shuffling=False,
    splits={
        'test': ,
        'train': ,
    },
    citation="""@TECHREPORT{Krizhevsky09learningmultiple,
        author = {Alex Krizhevsky},
        title = {Learning multiple layers of features from tiny images},
        institution = {},
        year = {2009}
    }""",
)

Adjust the model and dataset configurations so that they work with CIFAR-10 (cifar10).

# Configure model
exp_config.task.model.num_classes = 10
exp_config.task.model.input_size = list(ds_info.features["image"].shape)
exp_config.task.model.backbone.resnet.model_id = 18

# Configure training and testing data
batch_size = 128

exp_config.task.train_data.input_path = ''
exp_config.task.train_data.tfds_name = tfds_name
exp_config.task.train_data.tfds_split = 'train'
exp_config.task.train_data.global_batch_size = batch_size

exp_config.task.validation_data.input_path = ''
exp_config.task.validation_data.tfds_name = tfds_name
exp_config.task.validation_data.tfds_split = 'test'
exp_config.task.validation_data.global_batch_size = batch_size
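With a fixed batch size, it helps to know how many full batches each split yields. The sketch below works that out from the split sizes quoted in the dataset description (50,000 training and 10,000 test images); the helper name `full_batches` is hypothetical, and it assumes incomplete final batches are dropped.

```python
NUM_TRAIN_EXAMPLES = 50_000   # training images, per the dataset description
NUM_TEST_EXAMPLES = 10_000    # test images, per the dataset description
BATCH_SIZE = 128


def full_batches(num_examples, batch_size):
    """Number of complete batches when the final partial batch is dropped."""
    return num_examples // batch_size


train_batches_per_epoch = full_batches(NUM_TRAIN_EXAMPLES, BATCH_SIZE)
validation_batches = full_batches(NUM_TEST_EXAMPLES, BATCH_SIZE)
leftover_test_examples = NUM_TEST_EXAMPLES - validation_batches * BATCH_SIZE

print(train_batches_per_epoch, validation_batches, leftover_test_examples)
```

The validation figure is the same integer division the tutorial uses when it sets the number of validation steps.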

Adjust the trainer configuration.

logical_device_names = [logical_device.name for logical_device in tf.config.list_logical_devices()]

if 'GPU' in ''.join(logical_device_names):
    print('This may be broken in Colab.')
    device = 'GPU'
elif 'TPU' in ''.join(logical_device_names):
    print('This may be broken in Colab.')
    device = 'TPU'
else:
    print('Running on CPU is slow, so only train for a few steps.')
    device = 'CPU'

# Train for only a handful of steps on CPU; use a longer schedule on accelerators.
if device == 'CPU':
    train_steps = 20
    exp_config.trainer.steps_per_loop = 5
else:
    train_steps = 5000
    exp_config.trainer.steps_per_loop = 100

exp_config.trainer.summary_interval = 100
exp_config.trainer.checkpoint_interval = train_steps
exp_config.trainer.validation_interval = 1000
exp_config.trainer.validation_steps = ds_info.splits['test'].num_examples // batch_size
exp_config.trainer.train_steps = train_steps
exp_config.trainer.optimizer_config.learning_rate.type = 'cosine'
exp_config.trainer.optimizer_config.learning_rate.cosine.decay_steps = train_steps
exp_config.trainer.optimizer_config.learning_rate.cosine.initial_learning_rate = 0.1
exp_config.trainer.optimizer_config.warmup.linear.warmup_steps = 100

Running on CPU is slow, so only train for a few steps.
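To get a feel for how the cosine schedule and the linear warmup interact, here is a pure-Python sketch. It assumes the standard cosine-decay formula and assumes the warmup ramps linearly from the warmup learning rate toward the value the cosine schedule takes at the end of warmup; both function names are hypothetical, and the way Model Garden combines the two schedules may differ in detail.

```python
import math


def cosine_decay(step, initial_lr, decay_steps, alpha=0.0):
    """Standard cosine decay: initial_lr at step 0, alpha * initial_lr after decay_steps."""
    step = min(step, decay_steps)
    cosine = 0.5 * (1.0 + math.cos(math.pi * step / decay_steps))
    return initial_lr * ((1.0 - alpha) * cosine + alpha)


def warmup_then_cosine(step, initial_lr, decay_steps, warmup_steps, warmup_lr=0.0):
    """Assumed combination: linear ramp toward the cosine value at the end of warmup."""
    if step < warmup_steps:
        target = cosine_decay(warmup_steps, initial_lr, decay_steps)
        return warmup_lr + (step / warmup_steps) * (target - warmup_lr)
    return cosine_decay(step, initial_lr, decay_steps)


# CPU settings from this tutorial: 20 training steps, 100 warmup steps.
cpu_lr = [warmup_then_cosine(s, 0.1, decay_steps=20, warmup_steps=100) for s in (5, 10, 20)]

# Accelerator settings: 5000 training steps, 100 warmup steps.
gpu_lr = [warmup_then_cosine(s, 0.1, decay_steps=5000, warmup_steps=100) for s in (50, 100, 2500)]

print(cpu_lr)
print(gpu_lr)
```

Under these assumptions the 20-step CPU run never leaves warmup and the cosine schedule has already decayed to zero by step 100, so the effective learning rate stays at zero. That would be consistent with the `'learning_rate': 0.0` values in the training log of the CPU run, and is one more reason not to expect the short CPU run to learn anything.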

Print the modified configuration.

pprint.pprint(exp_config.as_dict())
display.Javascript("google.colab.output.setIframeHeight('300px');")

{'runtime': {'all_reduce_alg': None,

'batchnorm_spatial_persistent': False,

'dataset_num_private_threads': None,

'default_shard_dim': -1,

'distribution_strategy': 'mirrored',

'enable_xla': True,

'gpu_thread_mode': None,

'loss_scale': None,

'mixed_precision_dtype': None,

'num_cores_per_replica': 1,

'num_gpus': 0,

'num_packs': 1,

'per_gpu_thread_count': 0,

'run_eagerly': False,

'task_index': -1,

'tpu': None,

'tpu_enable_xla_dynamic_padder': None,

'use_tpu_mp_strategy': False,

'worker_hosts': None},

'task': {'allow_image_summary': False,

'differential_privacy_config': None,

'eval_input_partition_dims': [],

'evaluation': {'precision_and_recall_thresholds': None,

'report_per_class_precision_and_recall': False,

'top_k': 5},

'freeze_backbone': False,

'init_checkpoint': None,

'init_checkpoint_modules': 'all',

'losses': {'l2_weight_decay': 0.0001,

'label_smoothing': 0.0,

'loss_weight': 1.0,

'one_hot': True,

'soft_labels': False,

'use_binary_cross_entropy': False},

'model': {'add_head_batch_norm': False,

'backbone': {'resnet': {'bn_trainable': True,

'depth_multiplier': 1.0,

'model_id': 18,

'replace_stem_max_pool': False,

'resnetd_shortcut': False,

'scale_stem': True,

'se_ratio': 0.0,

'stem_type': 'v0',

'stochastic_depth_drop_rate': 0.0},

'type': 'resnet'},

'dropout_rate': 0.0,

'input_size': [32, 32, 3],

'kernel_initializer': 'random_uniform',

'norm_activation': {'activation': 'relu',

'norm_epsilon': 1e-05,

'norm_momentum': 0.9,

'use_sync_bn': False},

'num_classes': 10,

'output_softmax': False},

'model_output_keys': [],

'name': None,

'train_data': {'apply_tf_data_service_before_batching': False,

'aug_crop': True,

'aug_policy': None,

'aug_rand_hflip': True,

'aug_type': None,

'autotune_algorithm': None,

'block_length': 1,

'cache': False,

'center_crop_fraction': 0.875,

'color_jitter': 0.0,

'crop_area_range': (0.08, 1.0),

'cycle_length': 10,

'decode_jpeg_only': True,

'decoder': {'simple_decoder': {'attribute_names': [],

'mask_binarize_threshold': None,

'regenerate_source_id': False},

'type': 'simple_decoder'},

'deterministic': None,

'drop_remainder': True,

'dtype': 'float32',

'enable_shared_tf_data_service_between_parallel_trainers': False,

'enable_tf_data_service': False,

'file_type': 'tfrecord',

'global_batch_size': 128,

'image_field_key': 'image/encoded',

'input_path': '',

'is_multilabel': False,

'is_training': True,

'label_field_key': 'image/class/label',

'mixup_and_cutmix': None,

'prefetch_buffer_size': None,

'randaug_magnitude': 10,

'random_erasing': None,

'repeated_augment': None,

'seed': None,

'sharding': True,

'shuffle_buffer_size': 10000,

'tf_data_service_address': None,

'tf_data_service_job_name': None,

'tf_resize_method': 'bilinear',

'tfds_as_supervised': False,

'tfds_data_dir': '',

'tfds_name': 'cifar10',

'tfds_skip_decoding_feature': '',

'tfds_split': 'train',

'three_augment': False,

'trainer_id': None,

'weights': None},

'train_input_partition_dims': [],

'validation_data': {'apply_tf_data_service_before_batching': False,

'aug_crop': True,

'aug_policy': None,

'aug_rand_hflip': True,

'aug_type': None,

'autotune_algorithm': None,

'block_length': 1,

'cache': False,

'center_crop_fraction': 0.875,

'color_jitter': 0.0,

'crop_area_range': (0.08, 1.0),

'cycle_length': 10,

'decode_jpeg_only': True,

'decoder': {'simple_decoder': {'attribute_names': [],

'mask_binarize_threshold': None,

'regenerate_source_id': False},

'type': 'simple_decoder'},

'deterministic': None,

'drop_remainder': True,

'dtype': 'float32',

'enable_shared_tf_data_service_between_parallel_trainers': False,

'enable_tf_data_service': False,

'file_type': 'tfrecord',

'global_batch_size': 128,

'image_field_key': 'image/encoded',

'input_path': '',

'is_multilabel': False,

'is_training': False,

'label_field_key': 'image/class/label',

'mixup_and_cutmix': None,

'prefetch_buffer_size': None,

'randaug_magnitude': 10,

'random_erasing': None,

'repeated_augment': None,

'seed': None,

'sharding': True,

'shuffle_buffer_size': 10000,

'tf_data_service_address': None,

'tf_data_service_job_name': None,

'tf_resize_method': 'bilinear',

'tfds_as_supervised': False,

'tfds_data_dir': '',

'tfds_name': 'cifar10',

'tfds_skip_decoding_feature': '',

'tfds_split': 'test',

'three_augment': False,

'trainer_id': None,

'weights': None}},

'trainer': {'allow_tpu_summary': False,

'best_checkpoint_eval_metric': '',

'best_checkpoint_export_subdir': '',

'best_checkpoint_metric_comp': 'higher',

'checkpoint_interval': 20,

'continuous_eval_timeout': 3600,

'eval_tf_function': True,

'eval_tf_while_loop': False,

'loss_upper_bound': 1000000.0,

'max_to_keep': 5,

'optimizer_config': {'ema': None,

'learning_rate': {'cosine': {'alpha': 0.0,

'decay_steps': 20,

'initial_learning_rate': 0.1,

'name': 'CosineDecay',

'offset': 0},

'type': 'cosine'},

'optimizer': {'sgd': {'clipnorm': None,

'clipvalue': None,

'decay': 0.0,

'global_clipnorm': None,

'momentum': 0.9,

'name': 'SGD',

'nesterov': False},

'type': 'sgd'},

'warmup': {'linear': {'name': 'linear',

'warmup_learning_rate': 0,

'warmup_steps': 100},

'type': 'linear'}},

'preemption_on_demand_checkpoint': True,

'recovery_begin_steps': 0,

'recovery_max_trials': 0,

'steps_per_loop': 5,

'summary_interval': 100,

'train_steps': 20,

'train_tf_function': True,

'train_tf_while_loop': True,

'validation_interval': 1000,

'validation_steps': 78,

'validation_summary_subdir': 'validation'}}

Set up the distribution strategy.

logical_device_names = [logical_device.name for logical_device in tf.config.list_logical_devices()]

if exp_config.runtime.mixed_precision_dtype == tf.float16:
    tf.keras.mixed_precision.set_global_policy('mixed_float16')

if 'GPU' in ''.join(logical_device_names):
    distribution_strategy = tf.distribute.MirroredStrategy()
elif 'TPU' in ''.join(logical_device_names):
    tf.tpu.experimental.initialize_tpu_system()
    tpu = tf.distribute.cluster_resolver.TPUClusterResolver(tpu='/device:TPU_SYSTEM:0')
    distribution_strategy = tf.distribute.experimental.TPUStrategy(tpu)
else:
    print('Warning: this will be really slow.')
    distribution_strategy = tf.distribute.OneDeviceStrategy(logical_device_names[0])

Warning: this will be really slow.
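The device check above boils down to a substring test on the concatenated logical device names. A minimal sketch of that decision, isolated from TensorFlow so it can be tried on any machine, might look like this; `pick_strategy_name` is a hypothetical helper that returns only the name of the strategy class the tutorial would choose.

```python
def pick_strategy_name(device_names):
    """Mirror the tutorial's branching: prefer GPU, then TPU, else a single device."""
    joined = ''.join(device_names)
    if 'GPU' in joined:
        return 'MirroredStrategy'
    if 'TPU' in joined:
        return 'TPUStrategy'
    return 'OneDeviceStrategy'


cpu_only = pick_strategy_name(['/device:CPU:0'])
with_gpu = pick_strategy_name(['/device:CPU:0', '/device:GPU:0'])
print(cpu_only, with_gpu)
```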

Create the Task object (tfm.core.base_task.Task) from the config_definitions.TaskConfig.

The Task object has all the methods necessary for building the dataset, building the model, and running training and evaluation. These methods are driven by tfm.core.train_lib.run_experiment.

with distribution_strategy.scope():
    model_dir = tempfile.mkdtemp()
    task = tfm.core.task_factory.get_task(exp_config.task, logging_dir=model_dir)

# tf.keras.utils.plot_model(task.build_model(), show_shapes=True)

# Pull one batch from the training pipeline and inspect its shape and dtype.
for images, labels in task.build_inputs(exp_config.task.train_data).take(1):
    print()
    print(f'images.shape: {str(images.shape):16} images.dtype: {images.dtype!r}')
    print(f'labels.shape: {str(labels.shape):16} labels.dtype: {labels.dtype!r}')

images.shape: (128, 32, 32, 3) images.dtype: tf.float32

labels.shape: (128,) labels.dtype: tf.int32

Visualize the training data

The dataloader applies a z-score normalization using preprocess_ops.normalize_image(image, offset=MEAN_RGB, scale=STDDEV_RGB), so the images returned by the dataset can't be displayed directly by standard tools. The visualization code needs to rescale the data into the [0,1] range.

plt.hist(images.numpy().flatten());
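The normalization and the rescaling needed for display can be sketched in plain Python. The per-channel statistics below are the commonly used ImageNet values scaled to the 0-255 range; they are an assumption here, since the tutorial does not print MEAN_RGB or STDDEV_RGB, and both helper functions are hypothetical.

```python
# Assumed ImageNet channel statistics on the 0-255 scale (not shown in the tutorial).
MEAN_RGB = (0.485 * 255, 0.456 * 255, 0.406 * 255)
STDDEV_RGB = (0.229 * 255, 0.224 * 255, 0.225 * 255)


def normalize_pixel(rgb):
    """Z-score one RGB pixel: subtract the channel mean, divide by the channel stddev."""
    return tuple((c - m) / s for c, m, s in zip(rgb, MEAN_RGB, STDDEV_RGB))


def rescale_to_unit_range(values):
    """Min-max rescale so the smallest value maps to 0 and the largest to 1."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]


pixels = [(0, 0, 0), (128, 128, 128), (255, 255, 255)]
normalized = [c for p in pixels for c in normalize_pixel(p)]
displayable = rescale_to_unit_range(normalized)

print(min(normalized), max(normalized))
print(min(displayable), max(displayable))
```

The normalized values include negatives, which is why an image viewer expecting [0,1] floats cannot show them as-is; the min-max step only changes the scale for display and is not applied to the data fed to the model.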

Use ds_info (which is an instance of tfds.core.DatasetInfo) to look up the text descriptions of each class ID.

label_info = ds_info.features['label']
label_info.int2str(1)

'automobile'

Visualize a batch of the data.

def show_batch(images, labels, predictions=None):
    plt.figure(figsize=(10, 10))
    # Rescale the normalized pixel values into [0, 1] so imshow can display them.
    min_value = images.numpy().min()
    max_value = images.numpy().max()
    delta = max_value - min_value

    for i in range(12):
        plt.subplot(6, 6, i + 1)
        plt.imshow((images[i] - min_value) / delta)
        if predictions is None:
            plt.title(label_info.int2str(labels[i]))
        else:
            # Green title for a correct prediction, red for a wrong one.
            if labels[i] == predictions[i]:
                color = 'g'
            else:
                color = 'r'
            plt.title(label_info.int2str(predictions[i]), color=color)
        plt.axis("off")

plt.figure(figsize=(10, 10))
for images, labels in task.build_inputs(exp_config.task.train_data).take(1):
    show_batch(images, labels)

Visualize the testing data

Visualize a batch of images from the validation dataset.

plt.figure(figsize=(10, 10));
for images, labels in task.build_inputs(exp_config.task.validation_data).take(1):
    show_batch(images, labels)

Train and evaluate

model, eval_logs = tfm.core.train_lib.run_experiment(
    distribution_strategy=distribution_strategy,
    task=task,
    mode='train_and_eval',
    params=exp_config,
    model_dir=model_dir,
    run_post_eval=True)

restoring or initializing model...

INFO:tensorflow:Customized initialization is done by the passed `init_fn`.

train | step: 0 | training until step 20...

train | step: 5 | steps/sec: 0.5 | output:

{'accuracy': 0.103125,

'learning_rate': 0.0,

'top_5_accuracy': 0.4828125,

'training_loss': 2.7998607}

saved checkpoint to /tmpfs/tmp/tmpu0ate1h5/ckpt-5.

train | step: 10 | steps/sec: 0.8 | output:

{'accuracy': 0.0828125,

'learning_rate': 0.0,

'top_5_accuracy': 0.4984375,

'training_loss': 2.8205295}

train | step: 15 | steps/sec: 0.8 | output:

{'accuracy': 0.0921875,

'learning_rate': 0.0,

'top_5_accuracy': 0.503125,

'training_loss': 2.8169343}

train | step: 20 | steps/sec: 0.8 | output:

{'accuracy': 0.1015625,

'learning_rate': 0.0,

'top_5_accuracy': 0.45,

'training_loss': 2.8760865}

eval | step: 20 | running 78 steps of evaluation...

eval | step: 20 | steps/sec: 24.4 | eval time: 3.2 sec | output:

{'accuracy': 0.09485176,

'steps_per_second': 24.40085348913806,

'top_5_accuracy': 0.49589342,

'validation_loss': 2.5864375}

saved checkpoint to /tmpfs/tmp/tmpu0ate1h5/ckpt-20.

eval | step: 20 | running 78 steps of evaluation...

eval | step: 20 | steps/sec: 40.1 | eval time: 1.9 sec | output:

{'accuracy': 0.09485176,

'steps_per_second': 40.14298727815298,

'top_5_accuracy': 0.49589342,

'validation_loss': 2.5864375}

# tf.keras.utils.plot_model(model, show_shapes=True)

Print the accuracy, top_5_accuracy, and validation_loss evaluation metrics.

for key, value in eval_logs.items():
    if isinstance(value, tf.Tensor):
        value = value.numpy()
    print(f'{key:20}: {value:.3f}')

accuracy : 0.095

top_5_accuracy : 0.496

validation_loss : 2.586

steps_per_second : 40.143
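To make the difference between accuracy and top_5_accuracy concrete, here is a small pure-Python sketch of top-k accuracy. The logits and labels are made-up illustrative values, and `top_k_accuracy` is a hypothetical helper, not the metric implementation used by Model Garden.

```python
def top_k_accuracy(logits_batch, labels, k):
    """Fraction of examples whose true label is among the k highest-scoring classes."""
    hits = 0
    for logits, label in zip(logits_batch, labels):
        # Class indices sorted from highest to lowest score.
        ranked = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)
        if label in ranked[:k]:
            hits += 1
    return hits / len(labels)


# Three made-up examples over four classes.
logits_batch = [
    [0.1, 2.0, 0.3, 0.0],   # best guess: class 1
    [1.5, 0.2, 1.4, 0.1],   # best guess: class 0, runner-up: class 2
    [0.0, 0.1, 0.2, 3.0],   # best guess: class 3
]
labels = [1, 2, 0]

top1 = top_k_accuracy(logits_batch, labels, k=1)
top2 = top_k_accuracy(logits_batch, labels, k=2)
print(top1, top2)
```

For ten balanced classes, random guessing gives about 0.1 top-1 accuracy and about 0.5 top-5 accuracy, which is close to the 0.095 and 0.496 reported above for the 20-step CPU run.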

Run a batch of the processed training data through the model, and view the results.

for images, labels in task.build_inputs(exp_config.task.train_data).take(1):
    predictions = model.predict(images)
    # Convert per-class scores to the index of the highest-scoring class.
    predictions = tf.argmax(predictions, axis=-1)

show_batch(images, labels, tf.cast(predictions, tf.int32))

if device == 'CPU':
    plt.suptitle('The model was only trained for a few steps, it is not expected to do well.')

4/4 [==============================] - 1s 13ms/step

Export a SavedModel

The keras.Model object returned by train_lib.run_experiment expects the data to be normalized by the dataset loader using the same mean and variance statistics in preprocess_ops.normalize_image(image, offset=MEAN_RGB, scale=STDDEV_RGB). This export function handles those details, so you can pass tf.uint8 images and get the correct results.

# Saving and exporting the trained model
export_saved_model_lib.export_inference_graph(
    input_type='image_tensor',
    batch_size=1,
    input_image_size=[32, 32],
    params=exp_config,
    checkpoint_path=tf.train.latest_checkpoint(model_dir),
    export_dir='./export/')

INFO:tensorflow:Assets written to: ./export/assets
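The idea of folding preprocessing into the exported function can be sketched independently of TensorFlow. In the toy example below, `make_serving_fn` and `toy_model` are hypothetical stand-ins, and the channel statistics are the commonly used ImageNet values (an assumption, since the tutorial does not list them); the real export library builds a TensorFlow serving signature rather than a Python closure.

```python
# Assumed ImageNet channel statistics on the 0-255 scale (not shown in the tutorial).
MEAN_RGB = (0.485 * 255, 0.456 * 255, 0.406 * 255)
STDDEV_RGB = (0.229 * 255, 0.224 * 255, 0.225 * 255)


def toy_model(features):
    """Hypothetical stand-in for a trained model that expects normalized inputs."""
    return sum(features)


def make_serving_fn(model_fn):
    """Wrap a model so callers can pass raw 0-255 pixels instead of normalized ones."""
    def serving_fn(raw_pixel):
        normalized = [(c - m) / s for c, m, s in zip(raw_pixel, MEAN_RGB, STDDEV_RGB)]
        return model_fn(normalized)
    return serving_fn


serving_fn = make_serving_fn(toy_model)

# A pixel equal to the channel means normalizes to zeros, so the toy model returns 0.
print(serving_fn(MEAN_RGB))
# Feeding the same raw pixel straight to the model skips normalization and gives a different answer.
print(toy_model(MEAN_RGB))
```

Keeping normalization inside the served function means clients cannot accidentally skip it or apply different statistics from the ones used in training.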

Test the exported model.

# Importing SavedModel

imported = tf.saved_model.load('./export/')

model_fn = imported.signatures['serving_default']

Visualize the predictions.

plt.figure(figsize=(10, 10))
for data in tfds.load('cifar10', split='test').batch(12).take(1):
    predictions = []
    # The exported signature takes one uint8 image at a time (batch_size=1).
    for image in data['image']:
        index = tf.argmax(model_fn(image[tf.newaxis, ...])['logits'], axis=1)[0]
        predictions.append(index)
    show_batch(data['image'], data['label'], predictions)

    if device == 'CPU':
        plt.suptitle('The model was only trained for a few steps, it is not expected to do better than random.')
