Contents

- Overview

- Setup

- Enabling NumPy behavior

- TensorFlow NumPy ND array

- Type promotion

- Broadcasting

- Indexing

- Example model

- TensorFlow NumPy and NumPy

- NumPy interoperability

- Buffer copies

- Operator precedence

Overview

TensorFlow implements a subset of the NumPy API, available as `tf.experimental.numpy`. This lets you run NumPy code accelerated by TensorFlow, while keeping access to all of TensorFlow's APIs.

Setup

```python
import matplotlib.pyplot as plt
import numpy as np
import tensorflow as tf
import tensorflow.experimental.numpy as tnp
import timeit

print("Using TensorFlow version %s" % tf.__version__)
```


```
Using TensorFlow version 2.17.0
```

Enabling NumPy behavior

To use `tnp` as NumPy, enable NumPy behavior for TensorFlow:

```python
tnp.experimental_enable_numpy_behavior()
```

This call enables type promotion in TensorFlow. It also changes type inference when literals are converted to tensors, so that it follows the NumPy standard more strictly.

Note: This call changes the behavior of all of TensorFlow, not just the `tf.experimental.numpy` module.

TensorFlow NumPy ND array

An instance of `tf.experimental.numpy.ndarray`, called an ND array, represents a dense multidimensional array of a given `dtype` placed on a particular device. It is an alias for `tf.Tensor`. See the ND array class for useful methods such as `ndarray.T`, `ndarray.reshape` and `ndarray.ravel`.

First create an ND array object, then invoke some of its methods.

```python
# Create an ND array and check out different attributes.
ones = tnp.ones([5, 3], dtype=tnp.float32)
print("Created ND array with shape = %s, rank = %s, "
      "dtype = %s on device = %s\n" % (
          ones.shape, ones.ndim, ones.dtype, ones.device))

# `ndarray` is just an alias to `tf.Tensor`.
print("Is `ones` an instance of tf.Tensor: %s\n" % isinstance(ones, tf.Tensor))

# Try commonly used member functions.
print("ndarray.T has shape %s" % str(ones.T.shape))
print("ndarray.reshape(-1) has shape %s" % ones.reshape(-1).shape)
```

```
Created ND array with shape = (5, 3), rank = 2, dtype = <dtype: 'float32'> on device = /job:localhost/replica:0/task:0/device:GPU:0

Is `ones` an instance of tf.Tensor: True

ndarray.T has shape (3, 5)
ndarray.reshape(-1) has shape (15,)
```


Type promotion

There are 4 options for type promotion in TensorFlow.

- By default, TensorFlow raises errors for mixed-type operations instead of promoting types.

- Running `tf.experimental.numpy.experimental_enable_numpy_behavior()` switches TensorFlow to NumPy type promotion rules (described below).

- Since TensorFlow 2.15, there are two additional options (see the TF NumPy type promotion guide for details):

  - `tf.experimental.numpy.experimental_enable_numpy_behavior(dtype_conversion_mode="all")` uses JAX type promotion rules.

  - `tf.experimental.numpy.experimental_enable_numpy_behavior(dtype_conversion_mode="safe")` uses JAX type promotion rules, but disallows certain unsafe promotions.

NumPy type promotion

TensorFlow NumPy APIs have well-defined semantics for converting literals to ND arrays, and for performing type promotion on ND array inputs. See `np.result_type` for details.

TensorFlow APIs leave `tf.Tensor` inputs unchanged and do not perform type promotion on them. TensorFlow NumPy APIs, by contrast, promote all inputs according to NumPy type promotion rules. The next example performs type promotion. First, add ND array inputs of different types and note the output types. None of these type promotions would be allowed by the regular TensorFlow APIs.

```python
print("Type promotion for operations")
values = [tnp.asarray(1, dtype=d) for d in
          (tnp.int32, tnp.int64, tnp.float32, tnp.float64)]
# Add every pair of distinct dtypes once and report the result dtype.
for i, v1 in enumerate(values):
  for v2 in values[i + 1:]:
    print("%s + %s => %s" %
          (v1.dtype.name, v2.dtype.name, (v1 + v2).dtype.name))
```

```
Type promotion for operations
int32 + int64 => int64
int32 + float32 => float64
int32 + float64 => float64
int64 + float32 => float64
int64 + float64 => float64
float32 + float64 => float64
```
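Because TensorFlow NumPy follows NumPy's promotion rules here, the table above can be cross-checked against plain NumPy. The sketch below assumes only that NumPy is installed and uses `np.result_type`; the helper name `promotion_table` is hypothetical.

```python
import itertools

import numpy as np

# The four dtypes used in the TensorFlow NumPy example above.
DTYPES = (np.int32, np.int64, np.float32, np.float64)


def promotion_table(dtypes):
  """Map each unordered pair of dtypes to NumPy's promoted result dtype name."""
  return {
      (np.dtype(a).name, np.dtype(b).name): np.result_type(a, b).name
      for a, b in itertools.combinations(dtypes, 2)
  }


# Same pair order as the loop above: each dtype with every later one.
for (a, b), result in promotion_table(DTYPES).items():
  print("%s + %s => %s" % (a, b, result))
```

`itertools.combinations` visits the pairs in the same order as the nested loop in the TensorFlow example, so the printed lines line up one for one.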

Finally, convert literals to ND arrays using `tnp.asarray` and note the resulting type.

```python
print("Type inference during array creation")
print("tnp.asarray(1).dtype == tnp.%s" % tnp.asarray(1).dtype.name)
print("tnp.asarray(1.).dtype == tnp.%s\n" % tnp.asarray(1.).dtype.name)
```

```
Type inference during array creation
tnp.asarray(1).dtype == tnp.int64
tnp.asarray(1.).dtype == tnp.float64
```

When converting literals to ND arrays, NumPy prefers wide types such as `tnp.int64` and `tnp.float64`. In contrast, `tf.convert_to_tensor` prefers `tf.int32` and `tf.float32` when converting constants to `tf.Tensor`. TensorFlow NumPy APIs follow the NumPy behavior for integers. For floats, the `prefer_float32` argument of `experimental_enable_numpy_behavior` lets you control whether to prefer `tf.float32` over `tf.float64` (it defaults to `False`). For example:

```python
tnp.experimental_enable_numpy_behavior(prefer_float32=True)
print("When prefer_float32 is True:")
print("tnp.asarray(1.).dtype == tnp.%s" % tnp.asarray(1.).dtype.name)
print("tnp.add(1., 2.).dtype == tnp.%s" % tnp.add(1., 2.).dtype.name)

tnp.experimental_enable_numpy_behavior(prefer_float32=False)
print("When prefer_float32 is False:")
print("tnp.asarray(1.).dtype == tnp.%s" % tnp.asarray(1.).dtype.name)
print("tnp.add(1., 2.).dtype == tnp.%s" % tnp.add(1., 2.).dtype.name)
```

```
When prefer_float32 is True:
tnp.asarray(1.).dtype == tnp.float32
tnp.add(1., 2.).dtype == tnp.float32
When prefer_float32 is False:
tnp.asarray(1.).dtype == tnp.float64
tnp.add(1., 2.).dtype == tnp.float64
```

Broadcasting

Similar to TensorFlow, NumPy defines rich semantics for "broadcasting" values. See the NumPy broadcasting guide for more information, and compare it with TensorFlow's broadcasting semantics.

```python
x = tnp.ones([2, 3])
y = tnp.ones([3])
z = tnp.ones([1, 2, 1])
print("Broadcasting shapes %s, %s and %s gives shape %s" % (
    x.shape, y.shape, z.shape, (x + y + z).shape))
```

```
Broadcasting shapes (2, 3), (3,) and (1, 2, 1) gives shape (1, 2, 3)
```
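The rule behind that result can be sketched in a few lines of pure Python. This illustrates NumPy's documented broadcasting rules and is not TensorFlow's implementation. `broadcast_shape` is a hypothetical helper; NumPy itself provides `np.broadcast_shapes` for the same job.

```python
def broadcast_shape(*shapes):
  """Compute the broadcast result shape of several shapes, NumPy-style.

  Shapes are aligned on their trailing dimensions. Missing leading
  dimensions count as 1, and two sizes are compatible if they are equal
  or one of them is 1.
  """
  ndim = max(len(s) for s in shapes)
  # Left-pad every shape with 1s so that all shapes have the same rank.
  padded = [(1,) * (ndim - len(s)) + tuple(s) for s in shapes]
  result = []
  for sizes in zip(*padded):
    # Sizes of 1 stretch; at most one distinct other size may remain.
    non_one = {d for d in sizes if d != 1}
    if len(non_one) > 1:
      raise ValueError("shapes %s are not broadcastable" % (shapes,))
    result.append(non_one.pop() if non_one else 1)
  return tuple(result)


print(broadcast_shape((2, 3), (3,), (1, 2, 1)))
```

For the shapes in the example, padding gives `(1, 2, 3)`, `(1, 1, 3)` and `(1, 2, 1)`. Taking the non-1 size in each column then yields `(1, 2, 3)`.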

Indexing

NumPy defines very sophisticated indexing rules; see the NumPy indexing guide. Note the use of ND arrays as indices below.

```python
x = tnp.arange(24).reshape(2, 3, 4)

print("Basic indexing")
print(x[1, tnp.newaxis, 1:3, ...], "\n")

print("Boolean indexing")
print(x[:, (True, False, True)], "\n")

print("Advanced indexing")
print(x[1, (0, 0, 1), tnp.asarray([0, 1, 1])])
```

```
Basic indexing
tf.Tensor(
[[[16 17 18 19]
  [20 21 22 23]]], shape=(1, 2, 4), dtype=int64)

Boolean indexing
tf.Tensor(
[[[ 0  1  2  3]
  [ 8  9 10 11]]

 [[12 13 14 15]
  [20 21 22 23]]], shape=(2, 2, 4), dtype=int64)

Advanced indexing
tf.Tensor([12 13 17], shape=(3,), dtype=int64)
```
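These rules come from NumPy, so the same three expressions can be run on a plain NumPy array to check that TensorFlow NumPy matches NumPy here. This is a minimal sketch that assumes NumPy is installed; it does not call TensorFlow.

```python
import numpy as np

x = np.arange(24).reshape(2, 3, 4)

# Basic indexing: an integer, a new axis, a slice and an ellipsis.
basic = x[1, np.newaxis, 1:3, ...]

# Boolean indexing: keep entries 0 and 2 along axis 1.
boolean = x[:, [True, False, True]]

# Advanced indexing: the integer index arrays are broadcast together and
# zipped, selecting x[1, 0, 0], x[1, 0, 1] and x[1, 1, 1].
advanced = x[1, [0, 0, 1], np.asarray([0, 1, 1])]

print(basic.shape, boolean.shape, advanced)
```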

```python
# Mutation is currently not supported.
try:
  tnp.arange(6)[1] = -1
except TypeError:
  print("Currently, TensorFlow NumPy does not support mutation.")
```

```
Currently, TensorFlow NumPy does not support mutation.
```
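When you need an update anyway, one workaround is to build a new array instead of assigning into an existing one. The sketch below is an assumption and not an API from this guide. The helper `with_value_at` is hypothetical, and it is written against an array-module parameter `xp`. It is tested only with NumPy here. Passing `tnp` instead should work if `tnp.arange` and `tnp.where` behave like their NumPy counterparts, but that is not verified.

```python
import numpy as np


def with_value_at(xp, a, index, value):
  """Return a copy of 1-D array `a` with position `index` set to `value`.

  No in-place mutation is used: a boolean mask built with xp.arange
  selects between `value` and the original entries via xp.where.
  `xp` is the array module to use (for example numpy).
  """
  mask = xp.arange(a.shape[0]) == index
  return xp.where(mask, value, a)


a = np.arange(6)
b = with_value_at(np, a, 1, -1)
print(a, b)  # `a` is left unchanged; `b` is a new array.
```

For updates at arbitrary indices in TensorFlow itself, `tf.tensor_scatter_nd_update` is the usual functional alternative.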

Example model

Next, you can see how to create a model and run inference on it. This simple model applies a relu layer followed by a linear projection. Later sections show how to compute gradients for this model using TensorFlow's `GradientTape`.

```python
class Model(object):
  """Model with a dense and a linear layer."""

  def __init__(self):
    self.weights = None

  def predict(self, inputs):
    # Lazily create the weights on the first call, sized from the input.
    if self.weights is None:
      size = inputs.shape[1]
      # Note that type `tnp.float32` is used for performance.
      stddev = tnp.sqrt(size).astype(tnp.float32)
      w1 = tnp.random.randn(size, 64).astype(tnp.float32) / stddev
      bias = tnp.random.randn(64).astype(tnp.float32)
      w2 = tnp.random.randn(64, 2).astype(tnp.float32) / 8
      self.weights = (w1, bias, w2)
    else:
      w1, bias, w2 = self.weights
    y = tnp.matmul(inputs, w1) + bias
    y = tnp.maximum(y, 0)  # Relu
    return tnp.matmul(y, w2)  # Linear projection

model = Model()
# Create input data and compute predictions.
print(model.predict(tnp.ones([2, 32], dtype=tnp.float32)))
```

```
tf.Tensor(
[[-0.8292594   0.75780904]
 [-0.8292594   0.75780904]], shape=(2, 2), dtype=float32)
```
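The model uses only `matmul` and `maximum`, so its forward pass can also be written against an array-module parameter. That way the same function runs on NumPy arrays. This is a minimal sketch under that assumption. The names `init_weights`, `predict` and `xp` are hypothetical. The weights come from NumPy's `default_rng` rather than `tnp.random.randn`, and the code is exercised only with NumPy below.

```python
import numpy as np


def init_weights(size, hidden=64, outputs=2, seed=0):
  """Random float32 weights shaped like those in the `Model` class above."""
  rng = np.random.default_rng(seed)
  # Divide before casting so the results stay float32 under any NumPy version.
  w1 = (rng.standard_normal((size, hidden)) / np.sqrt(size)).astype(np.float32)
  bias = rng.standard_normal(hidden).astype(np.float32)
  w2 = (rng.standard_normal((hidden, outputs)) / 8).astype(np.float32)
  return w1, bias, w2


def predict(xp, inputs, weights):
  """Relu layer followed by a linear projection, using array module `xp`."""
  w1, bias, w2 = weights
  y = xp.maximum(xp.matmul(inputs, w1) + bias, 0)  # Relu
  return xp.matmul(y, w2)  # Linear projection


weights = init_weights(32)
out = predict(np, np.ones([2, 32], dtype=np.float32), weights)
print(out.shape, out.dtype)
```

As in the TensorFlow output above, identical input rows produce identical prediction rows.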

TensorFlow NumPy and NumPy

TensorFlow NumPy implements a subset of the full NumPy specification. More symbols will be added over time, but some systematic features will not be supported in the near future. These include NumPy C API support, SWIG integration, Fortran storage order, views and `stride_tricks`, and some `dtype`s (such as `np.recarray` and `np.object`). For more details, see the TensorFlow NumPy API documentation.
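To make the "views and `stride_tricks`" limitation concrete: in NumPy, stride tricks can build arrays that alias the same memory, and TensorFlow NumPy does not support such views. The NumPy-only sketch below assumes NumPy 1.20 or later for `sliding_window_view`. It contrasts a strided view with a copy-based construction that relies only on advanced indexing. Whether the copy-based version runs unchanged under `tnp` is an assumption that is not checked here.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

signal = np.arange(6)

# NumPy: a zero-copy view whose rows are overlapping windows of length 3.
windows_view = sliding_window_view(signal, 3)

# Copy-based alternative without stride tricks: build a (4, 3) index
# array and gather with advanced indexing, which always makes a new array.
idx = np.arange(3)[None, :] + np.arange(signal.size - 2)[:, None]
windows_copy = signal[idx]

print(windows_copy)
```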

NumPy interoperability

TensorFlow ND arrays can interoperate with NumPy functions. These objects implement the `__array__` interface, which NumPy uses to convert function arguments to `np.ndarray` values before processing them.

Similarly, TensorFlow NumPy functions accept inputs of different types, including `np.ndarray`. These inputs are converted to ND arrays by calling `tnp.asarray` on them.

Converting an ND array to or from `np.ndarray` may trigger actual data copies. See the section on buffer copies for more details.

```python
# ND array passed into a NumPy function.
np_sum = np.sum(tnp.ones([2, 3]))
print("sum = %s. Class: %s" % (float(np_sum), np_sum.__class__))

# `np.ndarray` passed into a TensorFlow NumPy function.
tnp_sum = tnp.sum(np.ones([2, 3]))
print("sum = %s. Class: %s" % (float(tnp_sum), tnp_sum.__class__))
```

```
sum = 6.0. Class:
sum = 6.0. Class:
```

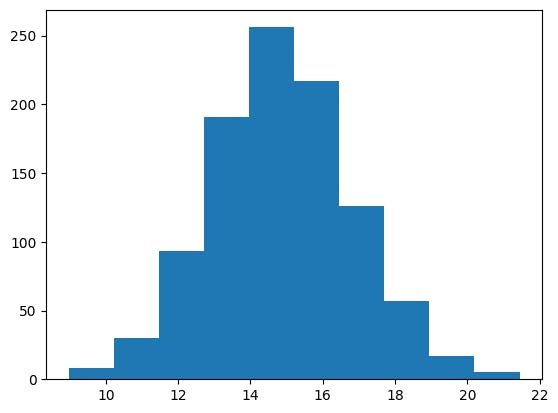

# It's straightforward to plot ND arrays, given the __array__ interface.

labels = 15 + 2 * tnp.random.randn(1, 1000)

_ = plt.hist(labels)
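The `__array__` mechanism is not specific to TensorFlow. The sketch below uses a hypothetical `ListBacked` class to show the minimal interface that lets NumPy functions accept an arbitrary object. It is a toy, not how `tf.Tensor` implements the protocol. The `copy` parameter follows the NumPy 2 form of `__array__`, and NumPy 1.x simply never passes it.

```python
import numpy as np


class ListBacked:
  """A toy container that NumPy functions can consume through `__array__`."""

  def __init__(self, values):
    self._values = list(values)

  def __array__(self, dtype=None, copy=None):
    # NumPy calls this to convert the object to an np.ndarray. The data
    # lives in a Python list, so a new array is always built; a request
    # for a guaranteed no-copy conversion therefore has to be refused.
    if copy is False:
      raise ValueError("ListBacked cannot be converted without a copy")
    return np.asarray(self._values, dtype=dtype)


data = ListBacked([1.0, 2.0, 3.0])
print(np.sum(data), np.asarray(data).dtype)
```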

Buffer copies

Intermixing TensorFlow NumPy with NumPy code may trigger data copies. This is because TensorFlow NumPy has stricter memory-alignment requirements than NumPy.

When an `np.ndarray` is passed to TensorFlow NumPy, TensorFlow NumPy checks the alignment requirements and makes a copy if needed. When an ND array's CPU buffer is passed to NumPy, the buffer generally satisfies NumPy's alignment requirements, so NumPy does not need to make a copy.

ND arrays can refer to buffers placed on devices other than local CPU memory. In such cases, invoking a NumPy function triggers copies across the network or from the device as needed.

Given this, intermixing with NumPy API calls should generally be done with caution, and you should watch out for the overhead of copying data. Interleaving TensorFlow NumPy calls with TensorFlow calls is generally safe and avoids copying data. See the section on TensorFlow interoperability for more details.
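The check-then-copy step can be sketched with NumPy alone. This illustration rests on assumptions and is not TensorFlow's code. `ALIGNMENT = 64` is a hypothetical requirement chosen for the example, since the real value is an internal detail not given in this guide. `is_aligned`, `aligned_copy` and `ensure_aligned` are hypothetical helpers.

```python
import numpy as np

# Hypothetical alignment requirement in bytes, for illustration only.
ALIGNMENT = 64


def is_aligned(arr, alignment=ALIGNMENT):
  """True if the array's data pointer is a multiple of `alignment` bytes."""
  return arr.ctypes.data % alignment == 0


def aligned_copy(arr, alignment=ALIGNMENT):
  """Copy `arr` into a freshly allocated buffer whose start is aligned."""
  # Over-allocate raw bytes, then start at the first aligned offset.
  raw = np.empty(arr.nbytes + alignment, dtype=np.uint8)
  offset = (-raw.ctypes.data) % alignment
  out = raw[offset:offset + arr.nbytes].view(arr.dtype).reshape(arr.shape)
  out[...] = arr
  return out


def ensure_aligned(arr, alignment=ALIGNMENT):
  """Return `arr` itself if already aligned, otherwise an aligned copy."""
  return arr if is_aligned(arr, alignment) else aligned_copy(arr, alignment)


buf = np.arange(17, dtype=np.uint8)
shifted = buf[1:]  # A view that starts one byte past the allocation.
fixed = ensure_aligned(shifted)  # Misaligned input, so this copies.
print(is_aligned(shifted), is_aligned(fixed))
```

The design mirrors the paragraph above: aligned inputs pass through without a copy, and only misaligned ones pay for a new buffer.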

Operator precedence

TensorFlow NumPy defines an `__array_priority__` higher than NumPy's. This means that for operators involving both an ND array and an `np.ndarray`, the ND array takes precedence. The `np.ndarray` input is converted to an ND array, and TensorFlow NumPy's implementation of the operator is invoked.

```python
x = tnp.ones([2]) + np.ones([2])
print("x = %s\nclass = %s" % (x, x.__class__))
```

```
x = tf.Tensor([2. 2.], shape=(2,), dtype=float64)
class =
```
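The same deferral rule can be observed with a toy class in plain NumPy. This is a minimal sketch that assumes NumPy's legacy `__array_priority__` handling for binary operators. The class `Wrapper` and the priority value 100 are chosen for illustration and are not taken from TensorFlow's source.

```python
import numpy as np


class Wrapper:
  """Toy operand that wins mixed `ndarray + Wrapper` additions."""

  # Higher than a plain ndarray's priority, so ndarray.__add__ returns
  # NotImplemented and Python falls back to Wrapper.__radd__.
  __array_priority__ = 100

  def __init__(self, values):
    self.values = np.asarray(values)

  def __add__(self, other):
    return Wrapper(self.values + np.asarray(other))

  __radd__ = __add__


result = np.ones(2) + Wrapper([1.0, 2.0])
print(type(result).__name__, result.values)
```

If you remove the `__array_priority__` line, NumPy's own `ndarray.__add__` handles the expression instead of `Wrapper.__radd__`, and the result is no longer a `Wrapper`.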

Originally published on the